HTTP/1.1 503 Service Unavailable

On Azure, we must design with the cost of operation and service constraints in mind. I recently had an interesting event where my REST service, deployed on a small (A1) cloud service instance, started to respond with HTTP status code 503 Service Unavailable.

The server is currently unable to handle the request due to a temporary overloading or maintenance of the server. The implication is that this is a temporary condition which will be alleviated after some delay. If known, the length of the delay MAY be indicated in a Retry-After header. If no Retry-After is given, the client SHOULD handle the response as it would for a 500 response. [Source: HTTP Status Code Definitions]
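From the consumer's side, the definition above translates into a small decision: wait for the period given in Retry-After when it is present, and fall back to your own policy when it is not. Here is a minimal sketch in Python, assuming the headers arrive as a plain dictionary. The function name `retry_delay_seconds` and the 5 second fallback are my own illustrative choices and are not part of the service described in this post.

```python
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime


def retry_delay_seconds(status, headers, default_delay=5.0, now=None):
    """Return how long to wait before retrying, or None if the status is not 503.

    `default_delay` is an assumed fallback used when Retry-After is missing
    or cannot be parsed; pick a value that suits your own client.
    """
    if status != 503:
        return None

    value = headers.get("Retry-After")
    if value is None:
        return default_delay

    value = value.strip()
    # Retry-After is either a whole number of seconds...
    if value.isascii() and value.isdigit():
        return float(value)

    # ...or an HTTP date such as "Wed, 21 Oct 2015 07:28:30 GMT".
    try:
        when = parsedate_to_datetime(value)
    except (TypeError, ValueError):
        return default_delay
    if when.tzinfo is None:
        when = when.replace(tzinfo=timezone.utc)

    now = now or datetime.now(timezone.utc)
    # A date in the past means the client may retry immediately.
    return max(0.0, (when - now).total_seconds())
```

For example, `retry_delay_seconds(503, {"Retry-After": "120"})` returns `120.0`, while a 503 without the header returns the fallback. Note that a plain dictionary lookup is case-sensitive, so a real client should use whatever case-insensitive header collection its HTTP library provides.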

Faced with this interesting challenge, I started looking in the usual places, which include SQL Database metrics, Azure Storage metrics, application logs and performance counters.

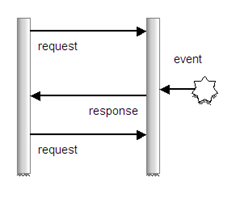

During my investigation, I noticed that the service was hit on average 1.5 million times a day. I also noticed that the open socket count was quite high. Trying to make sense of the situation, I started looking for sources of resource contention.

Looking at the application logs didn’t yield much information about why the network requests were piling up, but it did hint at internal process slowdowns following peak loads.

While extracting logs from Azure Table Storage, I finally got a break. I noticed that the service was generating 5 to 10 megabytes of application logs per minute. To put this into perspective, the service needs enough IO to respond to consumer requests, to push performance counter data to Azure Storage, to persist application logs to Azure Storage and to satisfy the application's need to interact with external resources.
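A quick back-of-the-envelope calculation helps put these figures side by side. The sketch below uses only the two numbers quoted in this post (1.5 million requests a day and 5 to 10 megabytes of logs per minute). It assumes decimal units and a load spread evenly over the day, which real traffic with peaks will not match.

```python
SECONDS_PER_DAY = 24 * 60 * 60
MINUTES_PER_DAY = 24 * 60

# Figures taken from the post.
requests_per_day = 1_500_000
log_mb_per_minute = (5, 10)  # low and high observations

# Assumption: traffic is spread evenly across the day.
requests_per_second = requests_per_day / SECONDS_PER_DAY
requests_per_minute = requests_per_day / MINUTES_PER_DAY

# Assumption: decimal units (1 GB = 1000 MB, 1 MB = 1000 KB).
log_gb_per_day = tuple(mb * MINUTES_PER_DAY / 1000 for mb in log_mb_per_minute)
log_kb_per_request = tuple(mb * 1000 / requests_per_minute for mb in log_mb_per_minute)

print(f"requests per second: {requests_per_second:.1f}")
print(f"log volume per day (GB): {log_gb_per_day[0]:.1f} to {log_gb_per_day[1]:.1f}")
print(f"log volume per request (KB): {log_kb_per_request[0]:.1f} to {log_kb_per_request[1]:.1f}")
```

Under those assumptions, the service averages about 17 requests per second and writes between 7.2 and 14.4 gigabytes of application logs a day, or roughly 4.8 to 9.6 kilobytes per request, all of which competes with request handling for the same IO on a small instance.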

Based on my observations, I came to the conclusion that my resource contention was around IO. Now, I rarely recommend scaling up, but in this case it made sense because the service moves lots of data around. One solution would have been to turn off telemetry. But doing so would have made me as comfortable as if I were flying a jumbo jet with a blindfold on. In other words, this isn't something I want to consider because I believe that telemetry is crucial when it comes to understanding how an application behaves on the cloud.

Just to be clear, scaling up worked in this situation, but it may not resolve all your issues. There are times when we need to tweak IIS configurations to make better use of resources and to handle large numbers of requests. Scaling up should remain the last option on your list.
